Bl@ckTo\/\/3r


In 2004, Fyodor wrote a chapter for the security fiction best-seller Stealing the Network: How to Own a Continent. His chapter is available free online. In 2005, Syngress released a sequel, named Stealing the Network: How to Own an Identity. The distinguished author list consists of Jeff "Dark Tangent" Moss, Jay Beale, Johnny "Google Hacker" Long, Riley "Caezar" Eller, Raven "Elevator Ninja" Alder, Tom Parker, Timothy "Thor" Mullen, Chris Hurley, Brian Hatch, and Ryan "BlueBoar" Russell. Syngress has generously allowed Fyodor to post his favorite chapter, which is Bl@ckTo\/\/3r (Ch5) by Nmap contributor Brian Hatch. This chapter is full of wry humor and creative security conundrums to keep the experts entertained, while it also offers informative security lessons on the finer points of SSH, SSL, and X Windows authentication and encryption. It stands on its own, so you don't need to read chapters one through four first. Enjoy!

Bl@ckTo\/\/3r

I have no idea if Charles is a hacker. Or rather, I know he's a hacker; I just don't know if he wears a white hat or a black hat.

Anyone with mad skills is a hacker -- hacker is a good word: it describes an intimate familiarity with how computers work. But it doesn't describe how you apply that knowledge, which is where the old white-hat / black-hat bit comes from. I still prefer using “hacker” and “cracker,” rather than hat color. If you're hacking, you're doing something cool, ingenious, for the purposes of doing it. If you're cracking, then you're trying to get access to resources that aren't yours. Good versus bad. Honorable versus dishonest.

Unfortunately, I am not a hacker. Nor am I a cracker. I've got a lot of Unix knowledge, but it has all been gained the legitimate, more bookish way. No hanging out in IRC channels, no illicit conversations with people who use sexy handles and alter egos, no trading secrets with folks on the other side of the globe. I'm a programmer, and sometimes a systems administrator. I work for the IT department of my alma mater; that's what you do when you are too lazy to go looking for a 'real job' after graduation. I had a work-study job, which turned into full-time employment when I was done with school. After all, there's not much work out there for philosophy majors.

Charles went a different route. Or should I call him Bl@ckTo\/\/3r? Yesterday he was just Charles Keyes. But yesterday I wasn't being held hostage in my own apartment. Our own apartment.

In fact, I don't know if I should even be speaking about him in the present tense.

He vanished a week ago. Not that disappearing without letting me know is unusual for him; he never lets me know anything he does. But this was the first time he's been gone that I've been visited by a gentleman who gave me a job and locked me in my apartment.

When I got home from work Friday night, the stranger was drinking a Starbucks and studying the photographs on the wall. He seemed completely comfortable; this wasn't the first unannounced house call in his line of work, whatever that is.

He was also efficient. Every time I thought of a question, he was already on his way to answering it. I didn't say a thing the entire time he was here.

“Good evening, Glenn,” he began. “Sorry to startle you, please sit down. No, there's no need to worry; although my arrival may be a surprise, you are in no trouble. I'd prefer to not disclose my affiliation, but suffice it to say I am not from the University, the local police, or the Recording Industry Association of America. What I need is a bit of your help: your roommate has some data stored on his systems, data to which my organization requires access. He came by this data through the course of contract actions on our behalf. Unfortunately, we are currently unable to find your co-tenant, and we need to re-acquire the data.

“He downloaded it to one of his Internet-connected servers, and stopped communicating with us immediately afterward. We do not know his location in the real world, or on the Internet. We did not cause his disappearance; that would not be in our interest. We have attempted to gain access to the server, but he seems to have invested significant time building defenses, which we have unfortunately triggered. The data is completely lost; that is certain.

“We were accustomed to him falling out of communication periodically, so we did not worry until a few days after the data acquisition occurred and we still had not heard from him. However, we have a strong suspicion that he uploaded some or all of the data to servers he kept here.

“In short, we need you to retrieve this data.

“We have network security experts, but what we lack is an understanding of how Bl@ckTo\/\/er thinks.

“His defenses lured our expert down a false path that led to the server wiping out the data quite thoroughly, and we believe that your long acquaintance with him should provide you with better results.

“You will be well compensated for your time on this task, but it will require your undivided attention. To that end, we have set your voice mail message to indicate you are out on short notice until Monday night. At that point, either you will have succeeded, or our opportunity to use the data will have passed. Either way, your participation will be complete.

“We'll provide for all your needs. You need to stay here. Do not contact anyone about what you are doing. We obviously cannot remove your Internet access because it will likely be required as you are working for us, but we will be monitoring it. Do not make us annoyed with you.

“Take some time to absorb the situation before you attempt anything. A clear head will be required.

“If you need to communicate with us, just give us a call, we'll be there.

“Good hunting.”

And out the door he walked.

Not sure what to do, I sat down to think. Actually, to freak out was more like it: this was the first I had ever heard of what sounded like a 'hacker handle' for Charles. I've got to admit, there's nothing sexy about Charles as a name, especially in 'l33t h&x0r' circles.

Maybe a bit of research will get me more in the mood, I thought. At the least, it might take my mind off the implied threat. Won't need the data after Monday, eh? Probably won't need me either, if I fail. Some data that caused Charles to go underground? Get kidnapped? Killed? I had no idea.

Of course, I couldn’t actually trust anything they said. The only thing I knew for sure is that I hadn't seen Charles for a week and, like I said, that's not terribly unusual.

Google, oh Google my friend: Let's see what we can see, I thought.

Charles never told me what he was working on, what he had done, or what he was going to do. Those were uninteresting details. Uninteresting details that I assume provided him with employment of one sort or another. But he needed attention, accolades, someone to tell him that he did cool things. I often felt as though the only reason he came to live in my place was because I humored him, gave him someone safe who he could regale with his cool hacks. He never told me where they were used, or even if they were used. If he discovered a flaw that would let him take over the entire Internet, it would be just as interesting to him as the device driver tweak he wrote to speed up the rate at which he could download the pictures from his camera phone. And he never even took pictures, so what was the bloody point?

It didn't matter; they were both hacks in the traditional sense, and that was what drove him. I had no idea how he used any of them. Not my problem, not my worry.

Well, I thought, I guess this weekend it is my worry. Fuck you, Charles. Bl@ckTo\/\/er. Bastard.

That's right, let's get back to Google.

No results on it at all until my fifth l337 spelling. Blackt0wer - nada. Bl&ckt0wer, zip. Thank goodness Google is case insensitive, or it would have taken even longer.

Looks like Charles has been busy out there: wrote several frequently-referenced Phrack articles, back when it didn't suck. Some low-level packet generation tools. Nice stuff.

Of course, I don't know if that handle really belongs to Charles at all. How much can I trust my captor? Hell, what was my captor's name? ‘The stranger’ doesn’t cut it. Gotta call him something else. How 'bout Agent Smith, from the Matrix? Neo killed him in the end, right? Actually, I'm not sure; the third movie didn't make much sense. And I'm not the uber hacker / cracker, or The One. Delusions of grandeur are not the way to start the weekend. Nevertheless, I thought, Smith it is.

I took stock of the situation:

Charles had probably ten servers in the closet off of the computer room. We each had a desk. His faced the door, with his back to the wall, probably because he was paranoid. Never let me look at what he was doing. When he wanted to show me something, he popped it up on my screen.

That's not a terribly sophisticated trick. X11, the foundation of any Linux graphical environment, has a very simple security model: if a machine can connect to the X11 server -- my screen, in this case -- which typically listens on TCP port 6000, and if it has the correct magic cookie, the remote machine can create a window on the screen. If you have your mouse in the window, it will send events, such as mouse movements, clicks, and key-presses, to the application running on the remote machine.

This is useful when you want to run a graphical app on a remote machine but interact with it on your desktop. A good example is how I run Nessus scans on our University network. The Nessus box, vulture, only has ssh open, so I ssh to it with X11 forwarding. That sets up all the necessary cookies, sets my $DISPLAY variable to the port on vulture where /usr/sbin/sshd is listening, and tunnels everything needed for the Nessus GUI to appear on my desktop. Wonderful little setup. Slow though, so don't try it without compression: if you run ssh -X, don't forget to add -C too.
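To illustrate the port arithmetic: an X11 display string has the form host:display.screen, and the X server for display N conventionally listens on TCP port 6000 + N. With ssh -X, sshd usually offers a proxy display starting at :10 (its X11DisplayOffset default), so $DISPLAY becomes something like localhost:10.0, which corresponds to port 6010 on vulture. This is a minimal sketch with a hypothetical function name. It ignores IPv6 literals and Unix-domain-socket displays.

```python
from typing import Tuple


def display_to_tcp_port(display: str) -> Tuple[str, int]:
    """Split an X11 DISPLAY string into (host, TCP port)."""
    # DISPLAY has the form host:display[.screen]; an empty host means local.
    host, sep, rest = display.rpartition(":")
    if not sep:
        raise ValueError(f"not an X11 display string: {display!r}")
    display_number = int(rest.split(".", 1)[0])
    # An X server for display N conventionally listens on TCP port 6000 + N.
    return host, 6000 + display_number


for d in (":0", "localhost:10.0"):
    print(d, "->", display_to_tcp_port(d))
```

In practice a local :0 is usually reached over a Unix-domain socket rather than TCP, and many distributions start X with TCP listening turned off.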

The problem with X11 is that it's all or nothing: if an application can connect to your display (your X11 server, on your desktop) then it can read any X11 event, or manage any X11 window. It can dump windows (xwd), send input to them (rm -rf /, right into an xterm) or read your keystrokes (xkey). If Charles was able to display stuff on my screen, he could get access to everything I typed, or run new commands on my behalf. Of course, he probably didn’t need to; the only way he should have been able to get an authorized MIT magic cookie was to read or modify my .Xauthority file, and he could only do that if he was able to log in as me, or had root permissions on my desktop.
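The MIT magic cookie is a shared secret stored in a file. Anyone who can read .Xauthority can present the cookie and connect. This minimal sketch parses that file's binary records, assuming the usual layout: a big-endian 16-bit address family, followed by four counted fields (address, display number, auth name, auth data), each prefixed by a big-endian 16-bit length. The function name is hypothetical. The real tool for this job is xauth list.

```python
import struct
from typing import Dict, List, Union


def parse_xauthority(blob: bytes) -> List[Dict[str, Union[int, bytes, str]]]:
    """Parse the records of a binary .Xauthority file."""
    entries = []
    offset = 0

    def read_u16() -> int:
        nonlocal offset
        (value,) = struct.unpack_from(">H", blob, offset)
        offset += 2
        return value

    def read_counted() -> bytes:
        # Each field is a big-endian 16-bit length followed by that many bytes.
        nonlocal offset
        length = read_u16()
        field = blob[offset:offset + length]
        if len(field) != length:
            raise ValueError("truncated .Xauthority record")
        offset += length
        return field

    while offset < len(blob):
        family = read_u16()
        address = read_counted()            # hostname or network address
        display = read_counted().decode()   # display number, e.g. "0"
        name = read_counted().decode()      # e.g. "MIT-MAGIC-COOKIE-1"
        cookie = read_counted().hex()       # the shared secret itself
        entries.append({"family": family, "address": address,
                        "display": display, "name": name, "cookie": cookie})
    return entries
```

On a real system you would pass in the bytes of ~/.Xauthority (or of whatever file $XAUTHORITY names). The cookie field is all an attacker needs, which is why read access to that file is equivalent to control of the display.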

Neither of these would have been a surprise. Unlike him, I didn’t spend much energy trying to secure my systems from a determined attacker. I knew he could break into anything I have here. Sure, I had a BIOS password that prevented anyone from booting off CD, mounting my disks, and doing anything he pleased. The boot-loader, grub, is password protected, so nobody can boot into single-user mode (which is protected with sulogin and thus requires the root password anyway) or change arguments to the kernel, such as adding "init=/bin/bash" or other trickery.

But he was better than I am, so those barriers were for others. Nothing stopped anyone from pulling out the drive, mounting it in his tower, and modifying it that way.

That's where Charles was far more paranoid than I. We had an extended power outage a few months ago, and the UPS wasn't large enough to keep his desktop powered the whole time, so it shut down. The server room machines are on a bigger UPS, so they lasted through the blackout. When the power was back, it took him about twenty minutes to get his desktop back online, whereas I was up and running in about three. Though he grumbled about all the things he needed to do to bring up his box, he still took it as an opportunity to show his greatness, his security know-how, his paranoia.

"Fail closed, man. Damned inconvenient, but when something bad is afoot, there's nothing better to do than fail closed. I dare anyone to try to get into this box, even with physical access. Where the hell is that black CD case? The one with all the CDs in it?"

This was how he thought. He had about 4000 CDs, all in black 40-slot cases. Some had black Sharpie lines drawn on them. I had a feeling that those CDs had no real data on them at all, they were just there to indicate that he'd found the right CD case. He pulled out a CD that had a piece of clear tape on it, pulled off the tape, and stuck it in his CD drive. As it booted, he checked every connector, every cable, the screws on the case, the tamper-proof stickers, the case lock, everything.

"Custom boot CD. Hard drive doesn't have a boot loader at all. CD requires a passphrase that, when combined with the CPU ID, the NIC's MAC, and other hardware info, is able to decrypt the initrd."

He began to type; it sounded like his passphrase was more than sixty characters. I'd bet that he hashed his passphrase and the hardware bits, so the effective decryption key was probably 128, 256, or 512 bits. Maybe more. But it'd need to be something standard to work with standard cryptographic algorithms. Then again, maybe his passphrase was just the right size, and random enough to fill out a standard key length; I wouldn't put it past him. Once he gave me a throwaway shell account on a server he knew, and the password was absolute gibberish, which he apparently generated with something like this:

$ cat ~/bin/randpw
#!/usr/bin/perl
use strict;
use warnings;

# All printable ascii characters
my @chars = (32..126);
my $num_chars = @chars;

# Passwords must be 50 chars long, unless specified otherwise
my $length = $ARGV[0] || 50;

while (1) {
    my $password;
    foreach (1..$length) {
        $password .= chr($chars[int(rand($num_chars))]);
    }

    # Password must have lower, upper, numeric, and 'other'
    if (    $password =~ /[a-z]/
        and $password =~ /[A-Z]/
        and $password =~ /[0-9]/
        and $password =~ /[^a-zA-Z0-9]/ ) {
        print $password, "\n";
        exit;
    }
}

$ randpw 10
(8;|vf4>7X
$ randpw
]'|ZJ{.iQo3(H4vA&c;Q?[hI8QN9Q@h-^G8$>n^`3I@gQOj/-(
$ randpw
Q(gUfqqKi2II96Km)kO&hUr,`,oL_Ohi)29v&[' Y^Mx{J-i(]
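The Perl script draws its characters with rand(), and Perl's own documentation warns that rand() is not cryptographically secure. The script would also loop forever if asked for fewer than four characters. Below is a hedged Python port of the same policy. It uses the secrets module for the randomness and adds a rough entropy estimate. The function names mirror the script but are otherwise illustrative.

```python
import math
import secrets
import string

# All printable ASCII characters (codes 32 through 126), as in the Perl script.
PRINTABLE = "".join(chr(c) for c in range(32, 127))


def randpw(length: int = 50) -> str:
    """Random password containing lower, upper, digit, and 'other' characters."""
    if length < 4:
        raise ValueError("need at least 4 characters to cover all four classes")
    while True:
        # secrets uses the operating system's CSPRNG, unlike Perl's rand().
        password = "".join(secrets.choice(PRINTABLE) for _ in range(length))
        if (any(c in string.ascii_lowercase for c in password)
                and any(c in string.ascii_uppercase for c in password)
                and any(c in string.digits for c in password)
                and any(not c.isalnum() for c in password)):
            return password


def entropy_bits(length: int, alphabet_size: int = len(PRINTABLE)) -> float:
    """Upper bound on entropy for characters drawn uniformly at random."""
    return length * math.log2(alphabet_size)


print(randpw(10))
print(f"{entropy_bits(50):.1f} bits")
```

Fifty characters drawn uniformly from 95 printable symbols give at most 50 * log2(95), or roughly 328 bits. That is more than enough to fill a 256-bit key. Rejecting candidates that miss a character class lowers that upper bound only slightly.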

He muttered as he typed the CD boot passphrase (wouldn’t you, if your passwords looked like so much modem line noise?), one of the few times I've ever seen that happen. He must type passwords all day long, but this was the first time I ever saw him think about it. Then again, we hadn't had a power outage for a year, and he was religiously opposed to rebooting Linux machines. Any time I rebooted my desktop, which was only when a kernel security update was required, he called me a Windows administrator, and it wasn't a compliment. How he updated his machines without rebooting I don't know, but I wouldn't put it past him to modify /dev/kmem directly, to patch the holes without ever actually rebooting into a patched kernel. It would seem more efficient to him.

He proceeded to describe some of his precautions: the (decrypted) initrd loaded up custom modules. Apparently he didn't like the default filesystems available with Linux, so he tweaked Reiserfs3, incorporating some of his favorite Reiser4 features and completely changing the layout on disk. Naturally, even that needed to be mounted via an encrypted loopback with another hundred-character passphrase and the use of a USB key fob that went back into a box with 40 identical unlabelled fobs as soon as that step was complete. He pulled out the CD, put a new piece of clear tape on it, and back it went. Twenty minutes of work, just to get his machine booted.
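Glenn can only guess how the boot CD turns a passphrase plus hardware details into a decryption key. This is a minimal sketch of one standard approach. It assumes PBKDF2-HMAC-SHA256 from Python's hashlib, which is a swapped-in technique: nothing in the story says Charles used it. The CPU ID and MAC values are hypothetical inputs.

```python
import hashlib


def derive_disk_key(passphrase: str, cpu_id: str, mac: str,
                    iterations: int = 200_000) -> bytes:
    """Stretch a passphrase plus hardware identifiers into a 256-bit key.

    Hypothetical scheme for illustration only.
    """
    # The hardware identifiers serve as the salt, so the same passphrase
    # yields a different key on different hardware.
    salt = f"{cpu_id}|{mac.lower()}".encode("utf-8")
    # PBKDF2 makes each guess cost `iterations` HMAC computations.
    return hashlib.pbkdf2_hmac("sha256", passphrase.encode("utf-8"),
                               salt, iterations, dklen=32)


key = derive_disk_key("example passphrase", "example-cpu-id", "00:11:22:33:44:55")
print(len(key) * 8, "bit key:", key.hex())
```

The hardware values are not secret, since anyone holding the machine can read them. They tie the key to one particular box rather than adding strength, so the security still rests on the passphrase.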

So some folks tried to get access to one of his servers on the Internet. His built-in defenses figured out what they were doing and wiped the server clean, which led them to me. Even if his server hadn’t wiped its own drives, I doubted that they could have found what they were looking for on the drive. He customized things so much that they benefited not only from security through encryption, but also from security through obscurity. His custom Reiser4 filesystem was not built for security reasons, only because he has to tinker with everything he touches. But it did mean that no one could mount it up on their box unless they knew the new inode layout.

I felt overwhelmed. I had to break into these boxes to find some data, without triggering anything. But I did have something those guys didn't: five-plus years of living with the guy who set up the defenses. The Honeynet team's motto is "Know your Enemy," and in that regard I've got a great advantage. Charles may not be my enemy, I thought -- I had no idea what I was doing, or for whom! -- but his defenses were my adversary, and I had a window into how he operated.

The back doorbell rang. I was a bit startled. Should I answer it? I wondered. I didn't know if my captors would consider that a breach of my imposed silence. But no one ever comes to the back door.

I left the computer room, headed through the kitchen, and peered out the back door. Nobody was there. I figured it was safe enough to check; maybe it was the bad guys, and they left a note. I didn't know if my captors were good or bad: were they law enforcement using unorthodox methods? Organized crime? Didn't really matter: anyone keeping me imprisoned in my own house qualified as the bad guys.

I opened the door. There on the mat were two large double pepperoni, green olive, no sauce pizzas, and four two-liter bottles of Mr. Pibb. I laughed: Charles' order. I never saw him eat anything else. When he was working, and he was almost always working, he sat there with one hand on the keyboard, the other hand with the pizza or the Pibb. It was amazing how fast he typed with only one hand. Lots of practice. Guess you get a lot of practice when you stop going to any college classes after your first month.

That was how we met: we were freshman roommates. He was already very skilled in UNIX and networking, but once he had access to the Internet at Ethernet speeds, he didn't do anything else. I don't know if he dropped out of school, technically, but they didn't kick him out of housing. Back then, he knew I was a budding UNIX geek, whereas he was well past the guru stage, so he enjoyed taunting me with his knowledge. Or maybe it was his need to show off, which has always been there. He confided in me all the cool things he could do, because he knew I was never a threat, and he needed to tell someone or he'd burst.

My senior year, he went away and I didn't see him again until the summer after graduation, when he moved into my apartment. He didn't actually ask. He just showed up and took over the small bedroom, and of course the computer room. Installed an AC unit in the closet and UPS units. Got us a T1, and some time later upgraded to something faster, not sure what. He never asked permission.

Early on, I asked how long he was staying and what we were going to do about splitting the rent. He said, "Don't worry about it." Soon the phone bill showed a $5000 credit balance, the cable was suddenly free, and we had every channel. I got a receipt for full payment of a five-year rental agreement on the apartment, which was odd, given that I'd only signed on for a year. A sticky note on my monitor had a username and password for Amazon; the account always seemed to have exactly enough gift certificate credit to cover my order total.

I stopped asking any questions.

I sat with two pizzas that weren't exactly my favorite. I’d never seen Charles call the pizza place; I figured he must have done it online, but he'd never had any delivered when he wasn't here. I decided to give the pizza place a call, to see how they got the order, in case it could help track him down -- I didn't think the bad guys would be angry if they could find Charles, and I really just wanted to hear someone else's voice right now, so I could pretend everything was normal.

“Hello, Glenn. What can we do for you?”

I picked up the phone, but hadn't started looking for the number for the pizza place yet. I hadn't dialed yet...

“Hello? Is this Pizza Time?”

“No. We had that sent to you. We figured that you're supposed to be getting into Bl@ckTo\/\/er's head, and it would be good to immerse yourself in the role. Don't worry; the tab is on us. Enjoy. We're getting some materials together for you, which we'll give you in a while. You should start thinking about your plan of attack. It's starting to get dark out, and we don't want you missing your beauty sleep, nor do we want any sleep-deprived slip-ups. That would make things hard for everyone.”

I remembered our meeting: Smith told me that I should call if I needed to talk, but he never gave me a phone number. They've played with the phone network, I thought, to make my house ring directly to them. I didn't know if they had done some phreaking at the central office, or if they had just rewired the pairs coming out of my house directly. Probably the former, I decided: after their problems with Charles' defenses, I doubted they would want to mess with something here that could possibly be noticed.

Planning, planning: what the hell was my plan? I knew physical access to the servers was right out. The desktop-reboot escapade proved that it would be futile without a team of top-notch cryptographers, and maybe Hans Reiser himself. That, and the fact that the servers were locked in the closet, which was protected with sensors that would shut all of the systems down if the door was opened or if anything moved, which would catch any attempt to break through the wall. I found that out when we had the earthquake up here in Seattle that shook things up. Charles was pissed, but at least he was amused by the video of Bill Gates running for cover; he watched that again and again for weeks, and giggled every time. I assumed there was something he could do to turn off the sensors, but I had no idea what that would be.

I needed to get into the systems while they were on. I needed to find a back door, an access method. I wondered how to think like him: cryptography would be used in everything; obscurity would be used in equal measure, to make things more annoying.

His remote server wiped itself when it saw a threat, which meant he assumed it would have data that should never be recoverable. However, I knew the servers here didn't wipe themselves clean. He had them well protected, but he wanted them as his pristine last-ditch backup copy. It was pretty stupid to keep them here: if someone was after him specifically -- and now somebody was -- that person would know where to go -- and he did. If he had spread things out on servers all over the place, it would have been more robust, and I wouldn’t have been in this jam. Hell, he could have used the Google file system on a bunch of compromised hosts just for fun; that was a hack he hadn't played with, and I bet it would have kept him interested for a week. Until he found out how to make it more robust and obfuscate it to oblivion.

So what was my status? I was effectively locked in at home. The phone was monitored, if it could be used to make outside calls at all. They claimed they were watching my network access; I needed to test that.

I went to hushmail.com and created a new account. I was using HTTPS for everything, so I knew it should all be encrypted. I sent myself an email, which asked the bad guys when they were going to pony up their ‘materials.’ I built about half of the email by copy/pasting letters using the mouse, so that a keystroke logger, either a physical one or an X11 hack, wouldn't help them any.

I waited. Nothing happened. I read the last week of User Friendly; I was behind and needed a laugh. What would Pitr do? He would probably plug a laptop into the switch port where Charles’ desktop was, in hope of having greater access from the VLAN Charles used.

Charles didn’t share the same physical segment of the network in the closet or in the room. I thought that there could be more permissive firewall rules on Charles’ network, or that perhaps I could sniff traffic from his other servers to get an idea about exactly what they were or weren’t communicating on the wire. A bit of ARP poisoning would allow me to impersonate the machines I wanted to monitor and act as a router for them. But I knew it would be fruitless. Charles would have nothing but cryptographic transactions, so all I’d get would be host and port information, not any of the actual data being transferred. And he probably had static ARP entries on his hosts and MAC addresses locked down on the switch, so ARP poisoning wouldn't work anyway.

But the main reason it wouldn’t work was that the switch enforced port-based access control using IEEE 802.1X authentication. 802.1X is infrequently used on a wired LAN (it’s more common on wireless networks), but it can block all traffic on a port, apart from the authentication exchange itself, until the client has authenticated.

If I wanted to plug into the port where Charles had his computer, I’d need to unplug his box and plug mine in. As soon as the switch saw the link go away, it would return the port to its unauthorized state. Then, when I plugged in, it would send an authentication request using EAP, the Extensible Authentication Protocol. In order for the switch to process my packets at all, I would need to authenticate using Charles’ passphrase.

When I tried to authenticate, the switch, acting as the 802.1X authenticator, would relay my attempt to the authentication server, a RADIUS server he had in the closet. Based on the user I authenticated as, the RADIUS server would tell the switch which VLAN to put me on. Which meant that the only way I could get access to his port, in the way he would access it, would be to know his layer 2 passphrase. And probably spoof his MAC address, which I didn’t know. I’d probably need to set up my networking configuration completely blind: I was sure he wouldn’t have a DHCP server, and I bet every port had its own network range, so I wouldn’t even see broadcasts that might help me discover the router’s address.
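In 802.1X terms, the plugged-in laptop is the supplicant, the switch is the authenticator, and the RADIUS server is the authentication server. Per-user VLAN assignment like this is commonly done by returning three tunnel attributes from RFC 3580 in the Access-Accept. This minimal sketch covers only that policy decision. The user table and function name are hypothetical, and it models no EAP method, shared secret, or packet encoding.

```python
from typing import Dict, Optional, Union

# RFC 3580 values used for dynamic VLAN assignment.
TUNNEL_TYPE_VLAN = 13        # Tunnel-Type = VLAN
TUNNEL_MEDIUM_IEEE_802 = 6   # Tunnel-Medium-Type = IEEE-802

# Hypothetical per-user policy; the names and VLAN IDs are made up.
USER_VLANS = {"charles": 42, "glenn": 10}


def access_reply(username: str, eap_succeeded: bool) -> Optional[Dict[str, Union[int, str]]]:
    """Return Access-Accept attributes, or None to signal Access-Reject."""
    if not eap_succeeded or username not in USER_VLANS:
        # On reject, the switch leaves the port unauthorized.
        return None
    return {
        "Tunnel-Type": TUNNEL_TYPE_VLAN,
        "Tunnel-Medium-Type": TUNNEL_MEDIUM_IEEE_802,
        # The VLAN ID travels as a string attribute.
        "Tunnel-Private-Group-ID": str(USER_VLANS[username]),
    }


print(access_reply("charles", eap_succeeded=True))
print(access_reply("charles", eap_succeeded=False))
```

Because the VLAN is chosen per user rather than per port, knowing which physical port Charles used buys nothing without his credentials.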

How depressing: I was sitting there, coming up with a million ways in which my task was impossible, without even trying anything.

I was awakened from my self-loathing when I received an email in my personal mailbox. It was PGP encrypted, but not signed, and included all the text of my Hushmail test message. Following that, it read, “We appreciate your test message, and its show of confidence in our ability to monitor you. However, we are employing you to get access to the data in the closet servers, not explore your boundaries. Below are instructions on how to download tcpdump captures from several hosts that seem to be part of a large distributed network which seems to be controlled from your apartment. This may or may not help in accessing the servers at your location.”

It was clear that they could decode even my SSL-encrypted traffic. Not good in general; pretty damned scary if they could do it in near real time. Brute-forcing a 128-bit SSL session key should be out of reach even for the big three-letter agencies, by any published estimate. This did not bode well.
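A back-of-the-envelope estimate shows why brute force is not a plausible explanation for reading SSL traffic in near real time. The sketch assumes a hypothetical attacker who can test 10^12 keys per second against a symmetric session key. It counts half the keyspace, which is the average case. The 40- and 56-bit rows are included for contrast.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def brute_force_years(key_bits: int, keys_per_second: float) -> float:
    """Expected years to find a key by exhaustive search (half the keyspace)."""
    return (2 ** key_bits / 2) / keys_per_second / SECONDS_PER_YEAR


# Hypothetical attacker: one trillion keys per second.
for bits in (40, 56, 128):
    print(f"{bits}-bit: {brute_force_years(bits, 1e12):.3g} years")
```

For 128 bits, the result is on the order of 10^18 years. That is why a compromised endpoint is the far likelier explanation.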

If there was one thing I learned from living with Charles, it was that you always need to question your assumptions, especially about security. When you program, you need to assume that the user who is inputting data is a moron and types the wrong thing: a decimal number where an integer is required, a number where a name belongs. Validating all the input and being sure it exactly matches what you require, as opposed to barring what you think is bad, is the way to program securely. It stops the problem of the moron at the keyboard, and also stops the attacker who tries to trick you, say with an SQL injection attack. If you expect a string with just letters, and sanitize the input to match before using it, it’s not possible for an attacker to slip in metacharacters you hadn't thought about that could be used to subvert your queries.
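This is a minimal sketch of the allowlist approach, assuming names must be 1 to 32 ASCII letters (a hypothetical rule). It validates with re.fullmatch, so a trailing newline cannot slip past the way it can with a $ anchor. As a second layer beyond what the text describes, it also passes the value through a sqlite3 placeholder instead of pasting it into the SQL.

```python
import re
import sqlite3
from typing import List, Tuple

# Hypothetical allowlist: a name is 1 to 32 ASCII letters, nothing else.
NAME_RE = re.compile(r"[A-Za-z]{1,32}")


def validate_name(raw: str) -> str:
    """Return raw unchanged if it matches the allowlist; otherwise reject it."""
    if NAME_RE.fullmatch(raw) is None:
        raise ValueError(f"invalid name: {raw!r}")
    return raw


def find_user(conn: sqlite3.Connection, raw_name: str) -> List[Tuple[int, str]]:
    name = validate_name(raw_name)
    # The ? placeholder keeps the value out of the SQL text as a second layer.
    return conn.execute("SELECT id, name FROM users WHERE name = ?",
                        (name,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("Charles",), ("Glenn",)])
print(find_user(conn, "Glenn"))
```

An input such as x' OR '1'='1 never reaches the database: it fails the allowlist before any query is built.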

Although it would seem these guys had infinite computing power, that was pretty unlikely. More likely my desktop had been compromised. Perhaps they were watching my X11 session, in the same manner Charles used to display stuff on my screen. I sniffed my own network traffic using tcpdump to see if there was any unexpected traffic, but I knew that wasn’t reliable if they'd installed a kernel module to hide their packets from user-space tools. None of the standard investigative tools helped: no strange connections visible by running netstat -nap, no strange logins via last, nothing helpful.

But I didn’t think I was looking for something I'd be able to find, at least not if these guys were as good as I imagined. They were a step below Charles, but certainly beyond me.

If I wanted to really sniff the network, I needed to snag my laptop, assuming it wasn't compromised as well, then put it on a span port off the switch. I could sniff my desktop from there. Plenty of time to do that later, if I felt the need while my other deadline loomed. I had a different theory.

I tried to log into vulture, my Nessus box at the university, using my ssh keys. I run an ssh agent, a process that you launch when you log in, to which you can add your private keys. Whenever you ssh to a machine, the /usr/bin/ssh program contacts the agent to get a list of keys it has stored in memory. If any key is acceptable to the remote server, the ssh program lets the agent authenticate using that key. This allows a user to keep an ssh private key passphrase-protected on disk, but load it into the agent, decrypted in memory, so that it can authenticate without requiring the user to type a passphrase each time ssh connects to a system.
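The exchange in which ssh asks the agent for its keys is short. This sketch shows the client side over the agent's Unix socket ($SSH_AUTH_SOCK). It follows the message format in OpenSSH's agent protocol description: message type 11 requests identities, and type 12 answers with public key blobs and comments. The function names are hypothetical. Nothing in this exchange carries a private key; signing is a separate request.

```python
import os
import socket
import struct
from typing import List, Optional, Tuple

SSH_AGENTC_REQUEST_IDENTITIES = 11
SSH_AGENT_IDENTITIES_ANSWER = 12


def build_request() -> bytes:
    # Every agent message is a uint32 length (type byte + body), then the type.
    return struct.pack(">IB", 1, SSH_AGENTC_REQUEST_IDENTITIES)


def _read_string(buf: bytes, off: int) -> Tuple[bytes, int]:
    # SSH "string": uint32 length followed by that many bytes.
    (n,) = struct.unpack_from(">I", buf, off)
    start = off + 4
    return buf[start:start + n], start + n


def parse_identities(msg: bytes) -> List[Tuple[bytes, str]]:
    """Parse an identities answer (type byte onward) into (key blob, comment)."""
    if not msg or msg[0] != SSH_AGENT_IDENTITIES_ANSWER:
        raise ValueError("unexpected agent reply")
    (count,) = struct.unpack_from(">I", msg, 1)
    off, keys = 5, []
    for _ in range(count):
        blob, off = _read_string(msg, off)
        comment, off = _read_string(msg, off)
        keys.append((blob, comment.decode("utf-8", "replace")))
    return keys


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    data = b""
    while len(data) < n:
        chunk = sock.recv(n - len(data))
        if not chunk:
            raise ConnectionError("agent closed the connection")
        data += chunk
    return data


def list_agent_keys(path: Optional[str] = None) -> List[Tuple[bytes, str]]:
    """Ask a running agent which public keys it holds."""
    path = path or os.environ["SSH_AUTH_SOCK"]
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(path)
        sock.sendall(build_request())
        (length,) = struct.unpack(">I", _recv_exact(sock, 4))
        return parse_identities(_recv_exact(sock, length))
```

On a machine with a running agent, list_agent_keys() should return the same keys that ssh-add -L prints, as raw blobs rather than base64. Anyone who can open that socket can make the same request.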

When I started ssh-agent, and when I added keys to it with ssh-add, I never used the -t flag to specify a lifetime. That meant my keys stayed in there forever, until I manually removed them or my ssh-agent process died. Had I set a lifetime, I would have had to re-add them when that lifetime expired. A lifetime is a good setting for users who worry that someone might get onto their machine as themselves or as root. Root can always contact your agent, because root can access any file, including the socket ssh-agent creates in /tmp. Anyone who can communicate with a given agent can use it to authenticate to any server that trusts those keys.

If Smith and his gang had compromised my machine, they could use it to log on to any of my shell accounts. But at least they wouldn’t be able to take the keys with them trivially. The agent can log someone in by performing the private-key operation (an RSA or DSA signature) on the client's behalf, but it won't ever spit out the decrypted private key. You can't force the agent to output a passphrase-free copy of the key; you'd need to read ssh-agent’s memory and extract it somehow. Unless my captors had that ability, they'd need to log into my machine in order to log into any of my shell accounts via my agent.

At the moment, I was just glad I could avoid typing my actual passwords anywhere they might have been able to get them.

I connected into vulture via ssh without incident, which was actually a surprise. No warnings meant that I was using secure end-to-end crypto, at least theoretically. I was betting on a proxy of some kind, given their ability to read my email. Just to be anal, I checked vulture’s ssh public key, which lived in /etc/ssh/ssh_host_rsa_key.pub, as it does on many systems.

vulture$ cat /etc/ssh/ssh_host_rsa_key.pub
ssh-rsa AAAAB3NzaC1yc2EAAAABIwAcu0AjgGBKc2Iu[...]G38= root@vulture

This was the public part of the host key, base64-encoded as printable text. When a user connects to an ssh server using the standard UNIX client, the client compares the host key the server presents against the user’s local host key lists, /etc/ssh/ssh_known_hosts and ~/.ssh/known_hosts. If the keys match, ssh will log the user in without any warnings. If they don’t match, the user gets a security alert, and in some cases may not even be permitted to log in. If the user has no local entry, the client asks permission to add the key presented by the remote host to ~/.ssh/known_hosts.
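This is a simplified sketch of the three-way decision, assuming plain known_hosts lines of the form "hostnames keytype base64-key [comment]". It ignores hashed hostnames, wildcard patterns, [host]:port entries, and @cert-authority or @revoked markers, all of which OpenSSH handles. check_host_key is a hypothetical name.

```python
from typing import Iterable


def check_host_key(known_hosts: Iterable[str], host: str,
                   keytype: str, key_b64: str) -> str:
    """Return 'match', 'mismatch', or 'unknown' for a presented host key."""
    seen_host = False
    for line in known_hosts:
        fields = line.split()
        # Skip blank lines, comments, and lines too short to hold a key.
        if len(fields) < 3 or fields[0].startswith("#"):
            continue
        hostnames, ktype, blob = fields[0], fields[1], fields[2]
        if host not in hostnames.split(",") or ktype != keytype:
            continue
        seen_host = True
        if blob == key_b64:
            return "match"        # log in silently
    # A stored key that differs triggers the warning; no key means "ask".
    return "mismatch" if seen_host else "unknown"


known = [
    "# hypothetical entries",
    "vulture,10.0.0.5 ssh-rsa AAAAexampleKeyA vulture",
]
print(check_host_key(known, "vulture", "ssh-rsa", "AAAAexampleKeyA"))
```

The whole scheme rests on the local files being trustworthy. An attacker who can rewrite ssh_known_hosts can turn a "mismatch" into a "match".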

I compared vulture’s real key, which I had just printed, to the value I had in my local and global cache files:

```
desktop$ grep vulture ~/.ssh/known_hosts /etc/ssh/ssh_known_hosts
/etc/ssh/ssh_known_hosts: vulture ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAIEAvPCH9IMinzL[...]0=
```

The two entries should have matched, but they didn't. After the first 23 characters, they weren’t even close. Another idea popped into my head: Dug Song's dsniff had an ssh man-in-the-middle attack, but it would always cause clients to generate host key errors when they attempted to log into a machine for which the user had already accepted the host key earlier: the keys would never match. But someone else had come up with a tool that generated keys with fingerprints that looked similar to a cracker-supplied fingerprint. The theory was that most people only looked at part of the fingerprint, and if it looked close enough, they'd accept the compromised key.

Checking the fingerprints of vulture's host key and the one in my known_hosts file, I could see they were similar but not quite identical:

```
# Find the fingerprint of the host key on vulture
vulture$ ssh-keygen -l -f /etc/ssh/ssh_host_rsa_key.pub
1024 cb:b9:6d:10:de:54:01:ea:92:1e:d4:ff:15:ad:e9:fb vulture

# Copy just the vulture key from my local file into a new file
desktop$ grep vulture /etc/ssh/ssh_known_hosts > /tmp/vulture.key

# Find the fingerprint of that key
desktop$ ssh-keygen -l -f /tmp/vulture.key
1024 cb:b8:6d:0e:be:c5:12:ae:8e:ee:f7:1f:ab:6d:e9:fb vulture
```
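The comparison above can be reproduced in Python. The sketch assumes the legacy MD5 fingerprint format shown in the output (the MD5 digest of the base64-decoded key blob, printed as colon-separated hex); newer OpenSSH releases default to SHA-256 and need `ssh-keygen -E md5 -l` to print this form. The helper names are hypothetical. Counting the byte positions that match exactly makes the "close enough at a glance" trick concrete.

```python
import base64
import hashlib


def md5_fingerprint(key_b64):
    """Legacy OpenSSH-style fingerprint: MD5 of the decoded key blob, colon-separated hex."""
    digest = hashlib.md5(base64.b64decode(key_b64)).hexdigest()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def matching_bytes(fp_a, fp_b):
    """Indexes of the byte positions where two colon-separated fingerprints agree."""
    return [i for i, (a, b) in enumerate(zip(fp_a.split(":"), fp_b.split(":"))) if a == b]


real = "cb:b9:6d:10:de:54:01:ea:92:1e:d4:ff:15:ad:e9:fb"
fake = "cb:b8:6d:0e:be:c5:12:ae:8e:ee:f7:1f:ab:6d:e9:fb"
print(matching_bytes(real, fake))  # [0, 2, 14, 15]
```

Only 4 of the 16 bytes agree, but they include the first byte and the last two, which is exactly where a hurried eye tends to look.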

So what was going on? I bet they had a transparent crypto-aware proxy of some kind between me and the Internet. Probably between me and the closet, if they could manage. If I made a TCP connection, the proxy would pick it up and connect to the actual target. If that target looked like an ssh server, it would generate a key that had a similar fingerprint for use with this session. It acted as an ssh client to the server, and an ssh server to me. When they compromised my desktop, they must have replaced the /etc/ssh/ssh_known_hosts entries with new ones they had pre-generated for the proxy. No secure ssh for me; it would all be intercepted.

SSL was probably even easier for them to intercept. I checked the X.509 certificate chain of my connection to that Hushmail account:

```
desktop$ openssl s_client -verify 0 -host www.hushmail.com -port 443 </dev/null >/dev/null
depth=1 /C=ZA/O=Thawte Consulting (Pty) Ltd./CN=thawte SGC CA
verify return:1
depth=0 /C=CA/2.5.4.17=V6G 1T1/ST=BC/L=Vancouver/2.5.4.9=Suite 203 455 Granville St. /O=Hush Communications Canada, Inc./OU=Issued through Hush Communications Canada, Inc.E-PKI Manager/OU=PremiumSSL/CN=www.hushmail.com
verify return:1
DONE
```

Here were the results of an SSL certificate verification. The openssl s_client command opened a TCP socket to www.hushmail.com on port 443, then read and verified the complete certificate chain that the server presented. By redirecting standard output to /dev/null, I stripped out a lot of s_client certificate noise; the depth and verify lines go to standard error, so they still showed up. By having it read from </dev/null, I convinced s_client to ‘hang up’, rather than wait for me to actually send a GET request to the Web server.

What bothered me was that the certificate chain was not a chain at all: it was composed of one server certificate and one root certificate. Usually you would have at least one intermediate certificate. Last week, before my network had been taken over, it would have looked more like this:

```
desktop$ openssl s_client -verify 0 -showcerts -host www.hushmail.com -port 443 </dev/null >/dev/null
depth=2 /C=US/O=GTE Corporation/OU=GTE CyberTrust Solutions, Inc./CN=GTE CyberTrust Global Root
verify return:1
depth=1 /C=GB/O=Comodo Limited/OU=Comodo Trust Network/OU=Terms and Conditions of use: http://www.comodo.net/repository/OU=(c)2002 Comodo Limited/CN=Comodo Class 3 Security Services CA
verify return:1
depth=0 /C=CA/2.5.4.17=V6G 1T1/ST=BC/L=Vancouver/2.5.4.9=Suite 203 455 Granville St. /O=Hush Communications Canada, Inc./OU=Issued through Hush Communications Canada, Inc. E-PKI Manager/OU=PremiumSSL/CN=www.hushmail.com
verify return:1
DONE
```

In this case, the depth 0 certificate (the Web server itself) was signed by depth 1, the intermediate CA run by a company named Comodo, and Comodo’s certificate was signed by the top-level CA, GTE CyberTrust. I hit a bunch of unrelated SSL-protected websites; all of them had their server certificates signed by the same Thawte certificate, with no intermediates at all. No other root CA, like Verisign or OpenCA, seemed to have signed any cert. Even Verisign’s website was signed by Thawte!

It seemed that my captors had generated a new CA certificate and used it to sign certificates for every Web server I contacted. As Thawte is a well-known CA, they chose this name for their new CA, in the hope that I wouldn’t notice. It looked as though they set it as a trusted CA in Mozilla Firefox, and also added it to my /etc/ssl/certs directory, which meant that it would be trusted by w3m and other text-only SSL tools. The proxy generated each fake server certificate with the exact same name as the real website, too. My captors were certainly thorough.

Just as with the ssh proxy, the SSL proxy must have acted as a man-in-the-middle. In this case, they didn't even need to fake fingerprints: they just generated a key (caching it for later use, presumably) and signed it with their CA key, which they forcibly made trusted on my desktop, so that it always looked legitimate.
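One defense against a proxy like this is pinning: record the fingerprint of the real server certificate from a network you trust, then compare later connections against it. A forged certificate can copy every name in the real one, but it is a different certificate with a different fingerprint, and presenting the genuine certificate would not help the proxy, since it lacks the matching private key. Below is a minimal Python sketch; the helper names are hypothetical, and a legitimate certificate renewal also changes the fingerprint, so a mismatch means "investigate" rather than proof of an attack.

```python
import hashlib
import ssl


def sha256_fingerprint(der_bytes):
    """Colon-separated, upper-case SHA-256 fingerprint of a DER-encoded certificate."""
    digest = hashlib.sha256(der_bytes).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def fetch_leaf_der(host, port=443):
    """Fetch the server's leaf certificate without validating it (needs network access)."""
    pem = ssl.get_server_certificate((host, port))
    return ssl.PEM_cert_to_DER_cert(pem)


def pin_matches(der_bytes, pinned_fingerprint):
    """True only if the presented certificate is the exact one pinned earlier."""
    return sha256_fingerprint(der_bytes) == pinned_fingerprint.upper()
```

Usage would be to call `fetch_leaf_der("www.hushmail.com")` once from a trusted network, store `sha256_fingerprint(...)` of the result, and later check each connection with `pin_matches`.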

So here I am, I thought, well and truly monitored. Crap. Well, time to look at this traffic dump they've got for me.

The email they sent provided me with the location of an ftp site, which hosted the tcpdump logs. It was approximately two gigabytes worth of data, gathered from ten different machines. I pulled up each file in a different window of Ethereal, the slickest packet analyzer out there.

I could see why they thought the machine here served as the controller: each machine in the dumps talked to two or three other machines, but one of Charles' hosts here communicated with all of them. The communication that originated from the apartment was infrequent, but it seemed to set off a lot of communication between the other nodes. The traffic all occurred in what appeared to be standard IRC protocol.

I looked at the actual content inside the IRC data, but it was gibberish. Encrypted, certainly. I caught the occasional cleartext string that looked like an IP address, but these IPs were not being contacted by the slave machines, at least not according to these logs.

The most confusing part was that the traffic appeared almost completely unidirectional: the master sent commands to the slaves, and they acknowledged that the command was received, but they never communicated back to the master. Perhaps they were attacking or analyzing other hosts, and saving the data locally. If that was what was going on, I couldn’t see it from these dumps. But a command from the server certainly triggered a lot of communication between the slave nodes.

I needed to ask them more about this, so I created two instant messaging accounts and started a conversation between them, figuring that my owners would be watching. I didn’t feel like talking to them on the phone. Unsurprisingly, and annoyingly, they answered me right away.

-> Hey, about these logs, all I see is the IRC traffic. What's missing? What else are these boxes doing? Who are they attacking? Where did you capture these dumps from? Do I have everything here?

<- The traffic was captured at the next hop. It contains all traffic.

-> All traffic? What about the attacks they're coordinating? Or the ssh traffic? Anything?

<- The dumps contain all the traffic. We did not miss anything. Deal.

Now they were getting pissy; great.

I returned to analyzing the data. Extracting the data segment of each packet, I couldn’t see anything helpful. I stayed up until 3am, feeling sick because of my inability to make any headway on the data, drinking too much Pibb, and eating that damned pizza. I sat in Charles’ chair with my laptop for a while, and tried to stay in the mood. I knocked over the two-liter bottle onto his keyboard -- thank goodness the cap was closed -- and decided that it was too late for me to use my brain. I needed some rest, and hoped that everything would make more sense in the morning.

Suffice it to say, my dreams were not pleasant.

I woke in the morning, showered (which deviated from the “live in Charles' shoes” model, but I’ve got standards!), and got back to the network traffic dumps.

For several hours I continued to pore over the communications. I dumped out all the data and tried various cryptographic techniques to analyze it. There were no appreciable repeating patterns, the byte values seemed evenly distributed, and the full 0-255 byte range was represented. In short, it all looked as though it had been encrypted with a strong cipher. I didn't think I would get anywhere with the data.
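One way to put a number on "evenly distributed" is Shannon entropy per byte: output from a strong cipher sits close to the 8-bit maximum, while English text or shell history comes out far lower. A minimal Python sketch follows; note that compressed data also scores near 8, so a high value is consistent with strong encryption rather than proof of it.

```python
import math
from collections import Counter


def bits_per_byte(data):
    """Shannon entropy of a byte string, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    counts = Counter(data)
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


print(bits_per_byte(bytes(range(256))))  # 8.0: every byte value equally likely
print(bits_per_byte(b"aaaa"))            # 0.0: no uncertainty at all
```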

The thing that continued to bug me was that these machines were talking over IRC and nothing else. Perhaps there were attacks occurring, or they were sharing information. I messaged my captors again:

-> What was running on the machines? Were they writing to disk? Anything there that helps?

<- The machines seemed to be standard webservers, administered by folks without any security knowledge. Our forensics indicate that he compromised the machines, patched them up, turned off the original services, and ran a single daemon that is not present on the hard drive -- when they were rebooted, the machines did not have any communication seen previously.

-> Is there nothing? Just this traffic? Why do you think this is related to the data that is here?

<- The data we're looking for was stored on 102.292.28.10, which is one of the units in your dump logs. We have no proof of it being received back at his home systems, but in previous cases where he has acquired data that was lost from off site servers, he was able to recover it from backups, presumably here.

I still didn't see how that could be: the servers here sent packets to the remote machines, but they never received any data from them, save the ACK packets.

Actually, that might be it, I thought: Could Charles be hiding data in the ACKs themselves? If he put data inside otherwise unused bits in the TCP headers themselves, he could slowly accumulate the bits and reassemble them.
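To make the idea concrete, here is a minimal Python sketch of one such scheme. It is purely hypothetical and not anything recovered from Charles' traffic: the sender hides four bits per packet in the low nibble of TCP header byte 12, bits that RFC 793 marked as reserved (later RFCs have since assigned one of them), and the receiver reassembles the nibbles into bytes. `make_ack` is a test helper that builds bare 20-byte headers with arbitrary port and sequence values.

```python
import struct


def reserved_nibble(tcp_header):
    """Low 4 bits of byte 12: the bits after the 4-bit data offset."""
    return tcp_header[12] & 0x0F


def reassemble(headers):
    """Join successive nibbles (high nibble first) back into bytes."""
    nibbles = [reserved_nibble(h) for h in headers]
    return bytes((hi << 4) | lo for hi, lo in zip(nibbles[0::2], nibbles[1::2]))


def make_ack(nibble):
    """Hypothetical helper: a bare 20-byte ACK header carrying one hidden nibble."""
    offset_byte = (5 << 4) | (nibble & 0x0F)  # data offset = 5 words, plus hidden bits
    ack_flag = 0x10
    # src port, dst port, seq, ack, offset/reserved, flags, window, checksum, urgent ptr
    return struct.pack("!HHIIBBHHH", 6667, 40000, 1, 1, offset_byte, ack_flag, 65535, 0, 0)
```

At four bits per ACK, even a short file needs thousands of packets, which is the throughput problem the narrator runs into below.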

So, rather than analyzing the data segments, I looked at bits in the ACKs, and applied more cryptanalysis. A headache started. Another damned pizza showed up at the door, and I snacked on it, my stomach turning all the while.

I came to the conclusion that I absolutely hated IRC. It was the stupidest protocol in the world. I've never been a fan of dual channel protocols -- they're not clean, they're harder to firewall, and they just annoy me. What really surprised me was that Charles was using it: he always professed a hatred of it, too.

At that point, I realized this was insane. There was no way he'd have written this for actual communications. Given the small number of ACK packets being sent, it couldn’t possibly be transferring data back here at a decent rate. The outbound commands did trigger something, but it seemed completely nonsensical. I refused to believe this was anything but a red herring, a practical joke, a way to force someone -- me, in this case -- to waste time. I needed to take a different tack.

Okay, I thought, let's look at something more direct. There's got to be a way to get in. Think like him.

Charles obsessed about not losing anything. He had boatloads of disk space in the closet, so he could keep a month or two of backups from his numerous remote systems. I couldn’t see him allowing himself to be locked out of what he had in the apartment. When he was out and about, he must have had remote access.

He kept everything in his head. In a pinch, without his desktop tools, without his laptop, he'd have a way to get in. Maybe not if he were stuck on a Windows box, but if he had vanilla user shell access on a UNIX box, he'd be able to do whatever was necessary to get in here. And that meant a little obfuscation and trickery, plus a boatload of passwords and secrets.

Forget this IRC bullshit, I thought, I bet he's got ssh access, one way or another.

I performed a portscan of the whole IP range from vulture -- after all, I was still logged in. Almost everything was filtered. Filtering always makes things take longer, which is a royal pain. I ate some more pizza -- I had to get in his head, you know.

I considered port knocking, a method wherein packets sent to predetermined ports will trigger a relaxation of firewall rules. This would allow Charles to open up access to the ssh port from an otherwise untrusted host. I doubted that he would use port knocking: either he'd need to memorize a boatload of ports and manually connect to them all, or he'd want a tool that included crypto as part of the port-choosing process. I didn't think he would want either of those: they were known systems, not home-grown. Certainly he'd never stand for downloading someone else's code in order to get emergency access into his boxes. Writing his own code on the fly was one thing; using someone else's was anathema.
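The bookkeeping behind port knocking is simple to model. Here is a minimal Python sketch of the server side, assuming a fixed, hypothetical three-port sequence. Real implementations typically watch firewall logs or raw packets and then insert a firewall rule; that part is left out.

```python
class KnockTracker:
    """Tracks each source's progress through a secret port sequence (hypothetical ports)."""

    def __init__(self, sequence):
        self.sequence = list(sequence)
        self.progress = {}   # source address -> knocks matched so far
        self.opened = set()  # sources the firewall would now let through

    def knock(self, src, port):
        """Record one knock; return True once src has completed the sequence."""
        step = self.progress.get(src, 0)
        if port == self.sequence[step]:
            step += 1
        else:
            # A wrong port resets progress, though it may itself be a fresh first knock.
            step = 1 if port == self.sequence[0] else 0
        if step == len(self.sequence):
            self.opened.add(src)
            step = 0
        self.progress[src] = step
        return src in self.opened
```

The weakness the narrator points out is visible here: the secret is just a list of numbers, so either the user memorizes it or a tool has to derive it, for example cryptographically.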

Port scans came up with one open port, 8741. Nothing I'd heard of lived on that port. I ran nmap -sV, nmap’s version detection, which works like OS fingerprinting, but for network services. It came up with zilch. The TCP three-way handshake succeeded, but as soon as I sent data to the port, it sent back a RST (reset) and closed the connection.

This was his last ditch back door. It had to be.

I wrote a Perl script to see what response I could get from the back door. My script connected, sent a single 0 byte (0x00), and printed out any response. Next, it would reconnect, send a single 1 byte (0x01), and print any response. Once it got up to 255 (0xFF), it would start sending two-byte sequences: 0x00 0x00, 0x00 0x01, 0x00 0x02, and so on. Lather, rinse, repeat.
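The enumeration order described above (every one-byte string, then every two-byte string, and so on) maps naturally onto a generator. This is a minimal Python sketch with the network side left out; the function name is hypothetical and it is not the narrator's Perl script.

```python
from itertools import count, islice, product


def all_byte_strings():
    """Yield every byte string: all 1-byte strings, then all 2-byte strings, and so on."""
    for length in count(1):
        # product() varies the last position fastest: 00 00, 00 01, ..., ff ff
        for combo in product(range(256), repeat=length):
            yield bytes(combo)


first = list(islice(all_byte_strings(), 258))
print(first[255], first[256], first[257])  # b'\xff' b'\x00\x00' b'\x00\x01'
```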

Unfortunately, I wasn’t getting anything from the socket at all. My plan was to enumerate every possible string from 1 to 20 or so bytes. Watching the debug output, it became clear that this was not feasible: there are 2^(8*20) different strings with twenty characters in them. That number is roughly 1.5 followed by 48 zeros (and we're talking decimal, not binary). If I limited my tests to just lowercase letters, which have less than 5 bits of entropy each, instead of 8 bits like an entire byte, that would still be 26^20 strings, a 29-digit number. I realized that there was no way I could get even close to trying them all; I didn't know what I had been thinking.
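Worked out explicitly, the two search spaces are as follows. The 26 lowercase letters carry log2 26 ≈ 4.70 bits each, so 5 bits per character is a round upper bound:

$$
\begin{aligned}
2^{8 \cdot 20} &= 2^{160} \approx 1.46 \times 10^{48} \\
26^{20} &\approx 1.99 \times 10^{28} \;<\; 2^{5 \cdot 20} = 2^{100} \approx 1.27 \times 10^{30}
\end{aligned}
$$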

So, instead of trying to hit all strings, I just sent in variations of my /usr/share/dict/words file, which contained about 100,000 English words, as well as a bunch of combinations of two words from the file. While it ran, I took the opportunity to emulate my favorite hacker/cracker for a while, surfing Groklaw with my right hand and munching on the revolting pizza, which I held in my left hand. Reading the latest SCO stories always brought a bit of reality back for me.

My brute force attempt using /usr/share/dict/words finally completed. Total bytes received from Charles' host: zilch, zero, nothing. Was this another thing he left to annoy people? A tripwire that, once hit, automatically added the offender to a block list for any actual services? Had I completely wasted my time?

I decided to look at the dumps in Ethereal, in case I was wrong and there had been data sent by his server that I hadn't been reading correctly. Looking at the dumps, which were extremely large, I noticed something odd.

First, I wasn't smoking crack: the server never sent back any data, it just closed the connection. However, it closed the connection in two distinct ways. The most common disconnect occurred when the server sent me a RST packet. This was the equivalent of saying “This connection is closed, don't send me anything at all any more in it, I don't even care if you get this packet, so don't bother letting me know you got it.” A RST is a rude way of closing a connection, because the system never verifies that the other machine got the RST; that host may think the connection is still open.

The infrequent connection close I saw in the packet dumps was a normal TCP teardown: the server sent a FIN|ACK, and waited for the peer to acknowledge, resending the FIN|ACK if necessary. This polite teardown is more akin to saying “I’m shutting down this connection, can you please confirm that you heard me?”

I couldn’t think of a normal reason this would occur, so I investigated. It seemed that every connection I established that sent either 1 or 8 data characters received the polite teardown.

All connections that sent one- or eight-character strings were shut down politely, regardless of the data contained in them. So, rather than worrying about the actual data, I tried sending random payloads of 1-500 bytes. The string lengths 1, 8, 27, 64, 125, 216, and 343 were all met with a polite TCP teardown, and the rest were shut down with RST packets.

Now I knew I was on to something. He was playing number games. All the connections with proper TCP shutdown had data lengths that were cubes! 1^3, 2^3, 3^3, and so on. I had been thinking about my data length, but more likely Charles had something that sent resets when incoming packet lengths weren't on his approved list. I vaguely remembered a '--length' option for iptables -- maybe he used that. More likely he patched his kernel for it, just because he could.

I got out my bible, W. Richard Stevens' TCP/IP Illustrated. Add the Ethernet, IP, and TCP headers together (14 + 20 + 20), and you get 54 bytes. Any packet being sent from a client will have some TCP options, such as a timestamp, maximum segment size, window scaling, and so on. These are typically 12 or 20 bytes long from the client, raising the effective minimum size to 66 bytes; that's without actually sending any data in the packet.

For every byte of data, you add one more byte to the total frame. Charles had something in his kernel that blocked any packets that weren't 66 + (x^3) bytes long.
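The rule can be written as a small Python predicate. The 66-byte overhead is the figure derived above (14-byte Ethernet, 20-byte IP, and 20-byte TCP headers plus 12 bytes of TCP options). The names are hypothetical, and this is only a model of the observed behavior, not Charles' actual filter.

```python
HEADER_BYTES = 66  # 14 Ethernet + 20 IP + 20 TCP + 12 bytes of TCP options


def is_cube(n):
    """True if the non-negative integer n is a perfect cube."""
    root = round(n ** (1 / 3))
    # Check neighbours too, since the floating-point cube root can be slightly off.
    return any((root + d) ** 3 == n for d in (-1, 0, 1))


def frame_allowed(frame_len, header_bytes=HEADER_BYTES):
    """Would a frame of this total length pass a 'header + x^3' rule?"""
    payload = frame_len - header_bytes
    return payload >= 0 and is_cube(payload)


print([n for n in range(66, 500) if frame_allowed(n)])
# [66, 67, 74, 93, 130, 191, 282, 409]
```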

If I could control the amount of data sent in any packet, I could be sure to send packets that wouldn’t reset the connection. Every decent programming language has a ‘send immediately, without buffering’ option. Unix has the write(2) system call, for example, and Perl calls that via syswrite. But what about packets sent by the client's kernel itself? I never manually sent the SYN that opens a connection, or the bare ACKs that follow; that was the kernel's job.

Again, Stevens at the ready, I saw that the 66 + (x^3) rule already handled this. A lone ACK, without any other data, would be exactly 66 bytes long -- in other words, x == 0. A SYN packet was always 74 bytes long -- x == 2. Everything else could be controlled by using as many packets with one data byte as necessary. A user space tool that intercepted incoming data and broke it up into the right chunks would be able to work on any random computer, without any alterations to its TCP/IP stack.

This is too mathematical -- a sick and twisted mind might say elegant -- to be coincidence. I drew up a chart.

Charles’ Acceptable Packet Lengths

| Data length (bytes) | Data length significance | Total Ethernet frame length (bytes) | Special matching packets |
|---|---|---|---|
| 0 | 0 cubed | 66 | ACK packets (ACK, RST\|ACK, FIN\|ACK) |
| 1 | 1 cubed | 67 | |
| 8 | 2 cubed | 74 | SYN (connection initiation) packets |
| 27 | 3 cubed | 93 | |
| 64 | 4 cubed | 130 | |
| 125 | 5 cubed | 191 | |
| 216 | 6 cubed | 282 | |
| 343 | 7 cubed | 409 | |

Where he came up with the idea for this shit, I didn’t know. But I was feeling good: this had his signature all over it. This was a number game that he could remember, and software he could recreate in a time of need.

I needed to write a proxy that would break up data I sent into packets of appropriate size. The ACKs created by my stack would automatically be accepted; no worries there.

Still, I felt certain this was an ssh server, but I realized that an ssh server should be sending a banner to my client socket, and this connection never sent anything.

Unless he's obfuscating again, I thought.

I realized that I needed to whip up a Perl script, which would read in as much data as it could, and then send out the data in acceptably-sized chunks. I could have my ssh client connect to it using a ProxyCommand. After a bit of writing, I came up with something:

```
desktop$ cat chunkssh.pl
```

```perl
#!/usr/bin/perl
use warnings;
use strict;
use IO::Socket;
use Socket qw(IPPROTO_TCP TCP_NODELAY);

# Optional -d flag: report chunk sizes on STDERR.
my $debug = 0;
if ( @ARGV and $ARGV[0] eq '-d' ) {
    $debug = 1;
    shift @ARGV;
}

my $ssh_server = shift @ARGV;
die "Usage: $0 [-d] ip.ad.dr.es\n" unless $ssh_server and not @ARGV;

my $ssh_socket = IO::Socket::INET->new(
    Proto    => "tcp",
    PeerAddr => $ssh_server,
    PeerPort => 22,
) or die "cannot connect to $ssh_server\n";

# Disable Nagle's algorithm, so the kernel doesn't merge several of
# our small writes into one larger packet of a forbidden size.
$ssh_socket->setsockopt( IPPROTO_TCP, TCP_NODELAY, 1 )
    or die "cannot set TCP_NODELAY: $!\n";

# The data 'chunk' sizes that are allowed by Charles' kernel, largest
# first. Zero-length segments (bare ACKs) are left to the kernel.
my @sendable = qw( 1331 1000 729 512 343 216 125 64 27 8 1 );

# Parent will read from SSH server, and send to STDOUT,
# the SSH client process.
if ( fork ) {
    my $data;
    while ( 1 ) {
        my $bytes_read = sysread $ssh_socket, $data, 9999;
        if ( not $bytes_read ) {
            warn "No more data from ssh server - exiting.\n" if $debug;
            exit 0;
        }
        syswrite STDOUT, $data, $bytes_read;
    }
}
# Child will read from STDIN, the SSH client process, and
# send to the SSH server socket only in appropriately-sized
# chunks. Will write chunk sizes to STDERR to prove it's working.
else {
    while ( 1 ) {
        my $data;

        # Read in at most one maximum-sized chunk.
        my $bytes_left = sysread STDIN, $data, $sendable[0];

        # Exit if the connection has closed.
        if ( not $bytes_left ) {
            warn "No more data from client - exiting.\n" if $debug;
            exit 0;
        }

        # Find the biggest chunk we can send, send as many of them
        # as we can, then move down to the next size.
        for my $size ( @sendable ) {
            while ( $bytes_left >= $size ) {
                warn "Sending $size bytes\n" if $debug;
                syswrite $ssh_socket, $data, $size;

                # Chop the bytes we just sent off the front of the buffer.
                substr( $data, 0, $size, '' );
                $bytes_left -= $size;
            }
            last unless $bytes_left;
        }
    }
}
```

I ran it against my local machine to see if it was generating the right packet data sizes:

```
desktop$ ssh -o "proxycommand chunkssh.pl -d %h" 127.0.0.1 'cat /etc/motd'
Sending 216 bytes
Sending 216 bytes
Sending 64 bytes
Sending 8 bytes
Sending 8 bytes
Sending 8 bytes
Sending 1 bytes
...
Sending 27 bytes
Sending 1 bytes
###################################
##                               ##
##   Glenn's Desk. Go Away...    ##
##                               ##
###################################
Sending 8 bytes
Sending 1 bytes
No more data from client - exiting.
```

I used an SSH ProxyCommand, via the -o flag. This told /usr/bin/ssh to run the chunkssh.pl program, rather than actually initiate a TCP connection to the ssh server. My script connected to the actual ssh server, getting the IP address from the %h macro, and shuttled data back and forth. A ProxyCommand could do anything, for example routing through an HTTP tunnel, bouncing off an intermediate ssh server, you name it. All I had here was something to send data to the server only in predetermined packet lengths.

So, with debug on, I saw all the byte counts being sent, and they adhered to the values I had reverse engineered. Without debug on, I would just see a normal ssh session.

I still had the slight problem that the server wasn't sending a normal ssh banner; usually the server sends its version string as soon as you connect:

```
desktop$ nc localhost 22
SSH-2.0-OpenSSH_3.8.1p1 Debian-8.sarge.4
```

My Perl script needed to output an ssh banner for my client. I didn't know what ssh daemon version Charles ran, but recent OpenSSH servers were all close enough that I hoped it wouldn’t matter. I added the following line to my code to present a faked ssh banner to my /usr/bin/ssh client:

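```perl
if ( fork ) {
    my $data;

    # Present the fake banner once, before relaying anything from the server.
    # syswrite, like the rest of the script, bypasses Perl's output buffering.
    syswrite STDOUT, "SSH-1.99-OpenSSH_3.8.1p1 Debian-8.sarge.4\r\n";

    while ( 1 ) {
        my $bytes_read = sysread $ssh_socket, $data, 9999;
        ...
```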

That would advertise the server as supporting SSH protocol 1 and 2 for maximum compatibility. Now, it was time to see if I was right -- if this was indeed an ssh server:
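The identification line has a fixed shape, `SSH-protoversion-softwareversion`, optionally followed by a space and comments (RFC 4253), and a protocol version of "1.99" is the conventional way for a server to say it speaks both 1.x and 2.0. A minimal Python sketch of pulling the line apart; the function names are hypothetical.

```python
def parse_ssh_banner(line):
    """Split 'SSH-protoversion-softwareversion [comments]' into its three parts."""
    line = line.rstrip("\r\n")
    if not line.startswith("SSH-"):
        raise ValueError("not an SSH identification string")
    ident, _, comments = line.partition(" ")
    # protoversion and softwareversion may not contain '-', so split at most twice.
    _, proto, software = ident.split("-", 2)
    return proto, software, comments


def speaks_protocol_2(proto):
    """'2.0' is SSH-2 only; '1.99' advertises both SSH-1 and SSH-2."""
    return proto in ("2.0", "1.99")


print(parse_ssh_banner("SSH-1.99-OpenSSH_3.8.1p1 Debian-8.sarge.4\r\n"))
# ('1.99', 'OpenSSH_3.8.1p1', 'Debian-8.sarge.4')
```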

```
desktop$ ssh -v -lroot -o "ProxyCommand chunkssh.pl %h" 198.285.22.10
debug1: Reading configuration data /etc/ssh/ssh_config
debug1: Applying options for *
...
debug1: SSH2_MSG_KEXINIT received
debug1: kex: server->client aes128-cbc hmac-md5 none
debug1: kex: client->server aes128-cbc hmac-md5 none
debug1: SSH2_MSG_KEX_DH_GEX_REQUEST(1024<1024<8192) sent
debug1: expecting SSH2_MSG_KEX_DH_GEX_GROUP
debug1: SSH2_MSG_KEX_DH_GEX_INIT sent
debug1: expecting SSH2_MSG_KEX_DH_GEX_REPLY
root@198.285.22.10's password:
```

Yes!

I had only one problem: what the hell was his password? And did he use root, or a user account? I could have brute-forced this all year without any luck. I needed to call on someone with more resources.

-> "I need his username and password."

<- "Yes, we see you made great progress. We don't have his passwords though. If we did, we'd take it from here."

-> "You're completely up to speed on my progress? I haven't even told you what I've done! Are you monitoring from the network? Have you seen him use this before? Give me something to work with here!"

<- "We told you we're monitoring everything. Here's what we do have. Uploaded to the same ftp site are results from a keystroke logger installed on his system. Unfortunately, he's found some way to encrypt the data."

-> "No way could you have broken into his computer and installed a software keystroke logger. That means you've installed hardware. But he checks the keyboard cables most every time he comes in - if you'd installed Keyghost or something, he'd have noticed -- it's small, but it's noticeable to someone with his paranoia. No way."

<- "Would you like the files or not? "

-> "Yeah, fuck you too, and send them over."

I was getting more hostile, and I knew that was not good. There was no way they could have installed a hardware logger on his keyboard: those things are discreet, but if you know what you're looking for, they're easy to see. I wouldn't have been surprised if he had something that detected when the keyboard was unplugged, to defeat that attack vector. I downloaded the logs...

```
01/21 23:43:10 x
01/21 23:43:10 8 1p2g1lfgj23g2/ [cio
01/21 23:43:11 ,uFeRW95@694:l|ItwXn
01/21 23:43:13 cc
01/21 23:43:13 x ggg
01/21 23:43:13 o. x,9a [ F | 8 [email protected] -x7o Goe9-
01/21 23:43:14 a g [n7wq rysv7.q[,q.r{b7ouqno [b.uno
01/21 23:43:15 .w U 6yscz h7,q 8oybbqz cyne 7eyg
01/21 23:43:19 qxhy oh7nd8 ay. cu8oqnuneg
```

The text was completely garbled. It included timestamps, which was helpful. Actually, it was rather frightening: they had been monitoring him for the last two months. More interesting was the fact that the latest entries were from that morning. When I knocked over the pop bottle on his keyboard.

Hardware keyloggers, at least the ones I was familiar with, had a magic password: go into an editor and type the password, and the logger would dump out its contents. But you needed to have the logger inline with the keyboard for it to work. If they retrieved the keylogger while I slept, I was sleeping more soundly than I'd thought.

Or perhaps it was still there. I went under the desk and looked around, but the keyboard cable was completely normal, with nothing attached to it. But how else could they have seen my klutz maneuver last night? Did someone make a wireless keylogger? I had no idea. How would I know?

They’d been monitoring for two months, from the looks of it. On a hunch, I went to our MRTG graphs. Charles was obsessed with his bandwidth (though I was sure he didn’t pay for it), so he liked to take measurements via SNMP and have MRTG graph traffic usage. One of the devices he monitored was the wireless AP he built for the apartment. He only used it for surfing Slashdot while watching Sci-Fi episodes in the living room. On my laptop, naturally.

Going back to the date when the keystroke logs started, there was a dip of approximately five percent in bandwidth we'd been able to use on the wireless network. Not enough to hurt our wireless performance enough to worry, but I bet the interference was because they were sending keystroke information wirelessly. Probably doing it on my keyboard too. They must have hooked into the keys themselves, somewhere in the keyboard case rather than at the end of the keyboard's cable. I'd never heard of such a device, which made me worry more.

Of course I could just be paranoid again, I thought, but at this point, I'd call that completely justified.

So now the puzzle: if Charles knew about the keystroke logger, why did he leave it there? And if he didn't know, how did he manage to encrypt it?

I went over to Charles’ keyboard. His screen was locked, so the system wouldn’t care about what I typed. I typed the phrase, “Pack my box with five dozen liquor jugs,” the shortest sentence I knew that used all 26 English letters.

-> "Hey, what did I just type on Charles' machine?"

<- "Sounds like you want to embark on a drinking binge, why?"

I didn't bother to answer.

Keyboard keys worked normally when he wasn’t logged in, so whatever he did to encrypt it didn’t occur until he logged in. I bet that these guys tried using the screensaver password to unlock it. They must not have known that you needed to have one of the USB fobs from the drawer, and the one he kept with him. Without them, the screen saver wouldn’t even try to authenticate your password. Another one of his customizations.

Looking at their keystroke log, the keyboard output was all garbled -- but garbled within the printable ASCII range. If it were really encrypted, you would expect there to be an equal probability of any byte from 0 through 255. I ran the output through a simple character counter, and discovered that the letters were not evenly distributed at all!
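A character counter of this kind takes a few lines of Python, and a chi-square statistic against a uniform distribution turns "not evenly distributed" into a number. This is a minimal sketch; using Python's `string.printable` as the reference alphabet is an assumption standing in for "the printable ASCII range".

```python
import string
from collections import Counter


def char_histogram(text, top=10):
    """The most common characters in the log text, newlines excluded."""
    return Counter(ch for ch in text if ch != "\n").most_common(top)


def chi_square_vs_uniform(text, alphabet=string.printable):
    """Chi-square statistic of character counts against a uniform distribution
    over `alphabet`: 0 means perfectly even, large values mean heavily skewed."""
    counts = Counter(ch for ch in text if ch in alphabet)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    expected = total / len(alphabet)
    return sum((counts[ch] - expected) ** 2 / expected for ch in alphabet)
```

Ciphertext rendered as printable characters would give a small statistic relative to its length; a keystroke log of shell commands, full of spaces, dashes, and a handful of favorite letters, gives a large one.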

Ignoring the letters themselves, it almost looked like someone working at a command line. Lots of short words (UNIX commands like ls, cd, and mv?), lots of newlines, spaces about as frequently as I normally had when working in bash.

But that implied a simple substitution cipher, like the good old-fashioned ROT-13 cipher, which rotates every letter 13 places down the alphabet: "A" becomes "N", "B" becomes "O", and so on. If this was a substitution cipher, and I knew the context was going to be lots of shell commands, I could do this.
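ROT-13 is a fixed substitution table, which Python expresses directly with `str.maketrans`. The same mechanism works for any other one-to-one character substitution once the table is known. A minimal sketch:

```python
import codecs
import string

UPPER, LOWER = string.ascii_uppercase, string.ascii_lowercase

# Each letter maps to the one 13 places later, wrapping around the alphabet.
ROT13_TABLE = str.maketrans(UPPER + LOWER,
                            UPPER[13:] + UPPER[:13] + LOWER[13:] + LOWER[:13])


def rot13(text):
    """Apply ROT-13; digits, punctuation, and whitespace pass through unchanged."""
    return text.translate(ROT13_TABLE)


print(rot13("ABC"))                                              # NOP
print(rot13("tar cvzf") == codecs.encode("tar cvzf", "rot_13"))  # True
```

Because 13 is half of 26, applying the same table twice restores the original text.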

First, what properties did the shell have? Unlike English, where I would try to figure out common short words like "a," "on," and "the," I knew that I should look for Linux command names at the beginning of lines. And commands take arguments, which meant I should be able to quickly identify the dash character: it would be used once or twice at the beginning of many 'words' in the output, as in -v or --debug. Instead of looking for "I" and "a" as single-character English words, I hoped to be able to find the "|" between commands, to pipe output of one program into the other, and "&" at the end to put commands in the background.
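The dash heuristic can be sketched in Python: tally the first character of every argument, meaning every token after the command name, and the most common one is a good candidate for whatever the cipher turned "-" into. The sketch assumes, as the log suggests, that spaces survive the substitution unchanged; the function name is hypothetical.

```python
from collections import Counter


def likely_option_prefix(lines):
    """Most common first character among argument tokens (everything after the command)."""
    starts = Counter()
    for line in lines:
        for tok in line.split()[1:]:
            starts[tok[0]] += 1
    return starts.most_common(1)[0][0] if starts else None


plain = ["ls -l -a", "tar -czf x.tgz dir", "grep -v foo bar"]
print(likely_option_prefix(plain))  # -
```

Run on the substituted text instead, the same tally points at the dash's stand-in character, giving the first entry in the decoding table.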

Time for some more pizza, I thought; this stuff grows on you.

Resting there, pizza in the left hand, right hand on the keyboard, I thought: This is how he works. He uses two hands no more than half the time. He's either holding food, on the phone, or turning the pages of a technical book with his left hand.

Typing one handed.

One handed typing.

It couldn't be that simple.

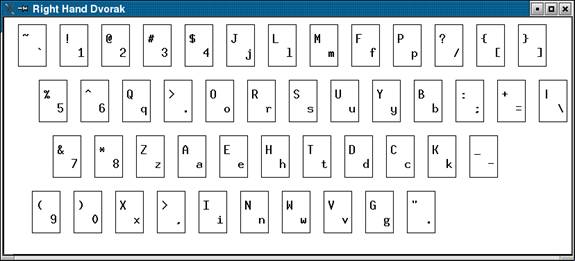

I went back to my machine, and opened up a new xterm. I set the "secure keyboard" option, so no standard X11 hacks could see my keystrokes. I took quite some time to copy and paste the command setxkbmap Dvorak-r, so as to avoid using the keyboard itself. I prefixed it with a space, to make sure it wouldn't enter my command history. This was all probably futile, but I thought I was on the home stretch, and I didn’t want to give that fact away to my jailers. They may have been able to see my Hushmail email that first night, even when I copied and pasted, but that was because the email went across the network, which was compromised. These cut/paste characters were never leaving my machine, so I figured they shouldn't be able to figure out what I was doing.

I picked a line that read, “o. x,9a [ F | 8 xi@x.7xdqz -x7o Goe9-”. It looked like an average-sized command. I typed it on my keyboard, which had the letters in the standard QWERTY locations. As I did so, my new X11 keyboard mapping, set via the setxkbmap command, translated my keystrokes to the right-handed Dvorak keyboard layout. On my screen appeared an intelligible UNIX command:

tr cvzf - * | s cb@cracked 'cat >tgz'

No encryption at all. Charles wasn’t using a QWERTY keyboard. The keystroke logger logged the actual keyboard keys, but he had them re-mapped in software.
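Because the logger recorded physical key positions, the "cipher" is just a fixed character map between two layouts. The Python sketch below, with hypothetical helper names `learn_table` and `decode`, does not assume the full Dvorak layout. Instead, it learns the map from aligned pairs of logged text and the command it turned out to be. The pairs are fragments taken from the line above. Characters it has not seen yet decode as "?".

```python
def learn_table(pairs: list[tuple[str, str]]) -> dict[str, str]:
    """Build a logged-char -> intended-char table from aligned example pairs."""
    table: dict[str, str] = {}
    for logged, intended in pairs:
        if len(logged) != len(intended):
            raise ValueError(f"length mismatch: {logged!r} / {intended!r}")
        for src, dst in zip(logged, intended):
            # setdefault returns any existing entry, so a clash is detectable.
            if table.setdefault(src, dst) != dst:
                raise ValueError(f"{src!r} maps to both {table[src]!r} and {dst!r}")
    return table


def decode(line: str, table: dict[str, str]) -> str:
    """Translate a logged line; characters not seen yet become '?'."""
    return "".join(table.get(ch, "?") for ch in line)


# Fragments of the logged line and the command they decoded to.
PAIRS = [
    ("o. x,9a [ F | 8", "tr cvzf - * | s"),
    ("-x7o Goe9-", "'cat >tgz'"),
]

table = learn_table(PAIRS)
print(decode("o7. x,a", table))  # tar cvf
print(decode("o7.q", table))     # tar?
```

Every new decoded line adds more pairs, so the table fills in quickly. The consistency check raises an error if the data ever contradicts the "one fixed map" assumption.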

The Dvorak keyboard layouts, unlike the QWERTY layouts, were built to be faster and easier on the hands: no stretching to reach common letters, which were located on the home row. The left- and right-handed Dvorak layouts were for individuals with only one hand: a modification of Dvorak that tried to put all the most important keys under that hand. You would need to stretch a long way to get to the percent key, but your alphabetic characters were right under your fingers. I'd known a lot of geeks who'd switched to Dvorak to save their wrists -- carpal tunnel is a bad way to end a career -- but never anyone with two hands who'd switched to a single-hand layout. I don't know whether Charles did it because of his need to multitask with work and food, or for some other reason, but that was the answer. And I certainly didn’t want to think what he'd be doing with his free hand if he didn't have food in it. But it was too late: that image was in my mind.

Dvorak Keyboard

One of the things that probably defeated most of the 'decryption' attempts was that he seemed to have a boatload of aliases. Common UNIX commands like cd, tar, ssh, and find were shortened to c, tr, s, and f somewhere in his .bashrc or equivalent. Man, Charles was either efficient or extremely lazy. Probably both.
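Once a line is decoded, the short aliases can be expanded back into the commands they stand for. This Python sketch is naive. The alias table is only the four guesses named above, because Charles's actual .bashrc is unknown. It only rewrites the word at the start of the line or right after a "|". It ignores ";", "&&", "||", and shell quoting, all of which a real shell's alias expansion would handle.

```python
import re

# Alias table guessed from the examples above; the real .bashrc is unknown.
ALIASES = {"c": "cd", "tr": "tar", "s": "ssh", "f": "find"}

# A command name sits at the start of the line or just after a pipe.
COMMAND_POSITION = re.compile(r"(^\s*|\|\s*)(\S+)")


def expand(command: str, aliases: dict[str, str] = ALIASES) -> str:
    """Replace aliased command names; arguments are left untouched."""
    def swap(match):
        word = match.group(2)
        return match.group(1) + aliases.get(word, word)

    return COMMAND_POSITION.sub(swap, command)


print(expand("tr cvzf - * | s cb@cracked 'cat >tgz'"))
# tar cvzf - * | ssh cb@cracked 'cat >tgz'
```

Restricting the substitution to command position matters: an argument that happens to be spelled "tr" or "f" should not be rewritten.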

Now I was stuck with an ethical dilemma. I knew I could look through that log and find a screen saver password; it would be a very long string, typed after a long period of inactivity. That would get me into his desktop, which might have ssh keys in memory. Sometime in the last two months, he must have typed the password to some of the closet servers, and now I had the secret to his ssh security.

I got this far because I'd known Charles a long time. Knew how he thought, how he worked. Now I was faced with how much I didn't know him.

I had been so focused on getting into these machines that I didn't think about what I'd do once I got here. What did Charles have stashed away in there? Were these guys the good guys or the bad guys? And what would they do if I helped them, or if I stopped them?

I couldn’t sit there, cutting and pasting letters all evening so they couldn’t see my keystrokes. They would get suspicious. Actually, that wouldn't work anyway: I would need to type on the keyboard to translate the logs. I would need to download a picture of the layout, and then they'd see me doing so, and know what I'd discovered.

Charles, I thought, I wish I knew what the hell you've gotten me into.

The End

If you enjoyed this chapter, you may enjoy the other nine story chapters. My next-favorite chapter is Tom Parker's Ch9 (especially the Bluetooth hacking details). Many of the others are great as well. You can buy the whole book at Amazon and save $14. You might also enjoy Return on Investment, Fyodor's free chapter from Stealing the Network: How to Own a Continent.